Lemmy itself and then run any of the importers. I guess it would be really straightforward.

I like sysadmin, scripting, manga and football.

Ubuntu is like all other Linux distributions: they add to fragmentation.

Everyone should run Arch Linux.

I use Arch Linux btw

Yeah, but I assumed this is about giving the GPU any user-defined amount of RAM.

From what I read online, this only works for integrated cards?

If you expect your IT cousin/uncle/brother who hosts the family Immich/Nextcloud to be untrustworthy with regard to bad actors, your problem is not exclusive to self-hosting.

That’s like saying a farmer will put cheese on a piece of cardboard for the mice to eat.

They might eat it, yes, but that wasn't the reason the whole interaction started. The glue around the cheese was.

It's supposedly faster and snappier at loading large rooms. But if you are self-hosting a single-user instance, you might not notice much improvement.

I was also running Dendrite, but I gave up because development seemed to have stalled, so I moved over to Synapse.

Note that most WireGuard clients won't re-resolve the endpoint when the DNS entry changes; they will silently keep a failed tunnel up, so you would need some way to periodically restart the tunnel.
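One possible "periodic measure" is a small script run from cron or a systemd timer: compare the peer's current endpoint with what DNS returns now and, only if they differ, hand the hostname back to `wg set`, which resolves it again. This is a minimal sketch, not a tested tool; it assumes `wg` from wireguard-tools is on the PATH, the script runs as root, and the interface name, peer public key and `host:port` are placeholders you pass on the command line.

```python
"""Sketch: re-point a WireGuard peer when its DNS name starts resolving elsewhere.

Usage (hypothetical values): reresolve.py wg0 <peer-public-key> vpn.example.com:51820
"""
from __future__ import annotations

import ipaddress
import socket
import subprocess
import sys


def parse_endpoints(wg_output: str) -> dict[str, str | None]:
    """Parse `wg show <iface> endpoints` output into {public_key: ip or None}."""
    endpoints: dict[str, str | None] = {}
    for line in wg_output.splitlines():
        if not line.strip():
            continue
        key, _, endpoint = line.partition("\t")
        if endpoint in ("", "(none)"):
            endpoints[key] = None
            continue
        host, _, _port = endpoint.rpartition(":")
        endpoints[key] = host.strip("[]")  # IPv6 endpoints are printed as [addr]:port
    return endpoints


def is_stale(current_ip: str | None, resolved_ips: set[str]) -> bool:
    """True if the peer has no endpoint or points at an address DNS no longer returns."""
    if current_ip is None:
        return True
    resolved = {ipaddress.ip_address(ip) for ip in resolved_ips}
    return ipaddress.ip_address(current_ip) not in resolved


def resolve(host: str, port: int) -> set[str]:
    """All addresses the system resolver currently returns for host."""
    return {info[4][0] for info in socket.getaddrinfo(host, port, type=socket.SOCK_DGRAM)}


def main(iface: str, peer_key: str, endpoint: str) -> None:
    host, _, port = endpoint.rpartition(":")
    out = subprocess.run(["wg", "show", iface, "endpoints"],
                         capture_output=True, text=True, check=True).stdout
    current = parse_endpoints(out).get(peer_key)
    if is_stale(current, resolve(host, int(port))):
        # Passing host:port again makes wg resolve the name and update the peer.
        subprocess.run(["wg", "set", iface, "peer", peer_key, "endpoint", endpoint],
                       check=True)


if __name__ == "__main__":
    if len(sys.argv) == 4:
        main(*sys.argv[1:])
    else:
        print("usage: reresolve.py IFACE PEER_PUBLIC_KEY HOST:PORT", file=sys.stderr)
```

Re-setting only the endpoint avoids tearing the whole interface down, but a plain `wg-quick down`/`up` on a timer is a simpler alternative if that fits your setup better.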

Is H.266 (VVC) actually taking off? With all the AOMedia members that control graphics hardware (AMD, Intel, Nvidia) on the same side, it feels like MPEG will need a big partner to stay relevant.

An old Nvidia card might draw too much power.

I was also using the integrated Intel GPU for video re-encodes, and I got an Arc A310 for 80 bucks, which is the cheapest new card with AV1 support you will find.

Better than anything. I run it through Vulkan in LM Studio because ROCm on my RX 5600 XT is a real pain.

Ollama has had an issue open about a Vulkan backend for a while, but sadly it doesn't seem to be going anywhere.

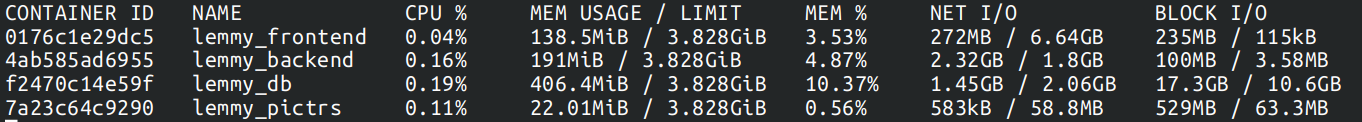

I put up some Docker stats in a reply, in case you missed it on refresh.

For the whole stack over the past 16 hours:

# docker-compose stats

It depends on how many communities you subscribe to and how much activity they have.

I'm running my single-user instance, subscribed to 20 communities, on a 2-core/4 GB VPS that also hosts my Matrix server and a bunch of other stuff. Right now I mostly see CPU peaks of 5-10% and RAM at 1.5 GB.

I have been running it for 15 months, and the Docker volumes total 1.2 GB. A single plain-text pg_dump of the Lemmy database is 450 MB.
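To get a stack-wide RAM figure like the 1.5 GB above, you can sum the per-container memory that `docker stats` reports. A minimal sketch, assuming the stack's containers share a name prefix (compose usually names them `<project>-<service>-<n>`; the `"lemmy"` prefix below is a hypothetical example) and that `docker` is on the PATH:

```python
"""Sketch: total memory used by one compose stack, from `docker stats` output."""
import re
import subprocess

# Docker prints binary units (KiB/MiB/GiB); decimal ones are included just in case.
_UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3,
          "kB": 1000, "MB": 1000**2, "GB": 1000**3}


def parse_size(text: str) -> float:
    """Convert a size such as '120.5MiB' to bytes."""
    m = re.fullmatch(r"\s*([\d.]+)\s*([A-Za-z]+)\s*", text)
    if not m or m.group(2) not in _UNITS:
        raise ValueError(f"unrecognised size: {text!r}")
    return float(m.group(1)) * _UNITS[m.group(2)]


def stack_memory(stats_output: str, prefix: str) -> float:
    """Sum the 'used' half of MemUsage ('used / limit') for containers matching prefix."""
    total = 0.0
    for line in stats_output.splitlines():
        name, _, mem_usage = line.partition("\t")
        if name.startswith(prefix) and mem_usage:
            total += parse_size(mem_usage.split("/")[0])
    return total


def read_stats() -> str:
    """One snapshot of name and memory usage for every running container."""
    return subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{.Name}}\t{{.MemUsage}}"],
        capture_output=True, text=True, check=True,
    ).stdout
```

For example, `stack_memory(read_stats(), "lemmy") / 1024**3` gives the current total in GiB. This is a single snapshot; peaks over hours like the ones quoted above need repeated sampling or a monitoring tool.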

Yep, I also run wildcard domains for simplicity.

I do the DNS challenge with Let's Encrypt too, but to avoid leaking local DNS names to the public, I just run a Pi-hole locally that resolves those domains.
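A quick way to confirm this split setup does what you want is to check that the internal names resolve only to LAN addresses when you query through the local Pi-hole (and not at all from outside). A minimal sketch; the hostnames you feed it are your own, and "LAN-only" here means Python's `ipaddress` considers every returned address private:

```python
"""Sketch: check that internal hostnames resolve only to private (LAN) addresses."""
from __future__ import annotations

import ipaddress
import socket


def resolve_all(host: str) -> set[str]:
    """Addresses the system resolver returns for host, or an empty set if none."""
    try:
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return set()
    return {info[4][0] for info in infos}


def lan_only(addresses: set[str]) -> bool:
    """True if there is at least one address and all of them are private."""
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)


def report(hosts: list[str]) -> dict[str, bool]:
    """Map each hostname to whether it currently resolves to LAN addresses only."""
    return {host: lan_only(resolve_all(host)) for host in hosts}
```

Running `report([...])` with your internal names on a machine that uses the Pi-hole should give `True` everywhere; from a public resolver those names should simply not resolve.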

What is your budget?

i5-7200U

That is Kaby Lake, which seems to support hardware decoding up to HEVC; that might be enough for you.

https://en.wikipedia.org/wiki/Intel_Quick_Sync_Video#Hardware_decoding_and_encoding

For a bit of future-proofing, you might want to look at Tiger Lake or newer, since those seem to support AV1 decoding in hardware.
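The Wikipedia table is per CPU generation; on an actual Linux box you can check what the driver exposes with `vainfo` (from libva-utils), where a `VAEntrypointVLD` entry means hardware decode and `VAEntrypointEncSlice`/`VAEntrypointEncSliceLP` mean hardware encode for that profile. A minimal parsing sketch, assuming `vainfo`'s usual `VAProfileX : VAEntrypointY` line layout:

```python
"""Sketch: read VA-API decode/encode support from `vainfo` output."""
from __future__ import annotations

import subprocess

DECODE = {"VAEntrypointVLD"}
ENCODE = {"VAEntrypointEncSlice", "VAEntrypointEncSliceLP"}


def parse_vainfo(output: str) -> dict[str, set[str]]:
    """Map each VAProfile name to the set of entrypoints listed for it."""
    caps: dict[str, set[str]] = {}
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("VAProfile") or ":" not in line:
            continue  # skips header lines such as "vainfo: VA-API version ..."
        profile, _, entrypoint = line.partition(":")
        caps.setdefault(profile.strip(), set()).add(entrypoint.strip())
    return caps


def _supports(caps: dict[str, set[str]], codec: str, entrypoints: set[str]) -> bool:
    prefix = "VAProfile" + codec  # e.g. "AV1", "HEVC", "VP9", "H264"
    return any(p.startswith(prefix) and bool(eps & entrypoints) for p, eps in caps.items())


def can_decode(caps: dict[str, set[str]], codec: str) -> bool:
    return _supports(caps, codec, DECODE)


def can_encode(caps: dict[str, set[str]], codec: str) -> bool:
    return _supports(caps, codec, ENCODE)


def read_vainfo() -> str:
    """Run vainfo on the default device and return its stdout."""
    return subprocess.run(["vainfo"], capture_output=True, text=True, check=True).stdout
```

For example, `can_decode(parse_vainfo(read_vainfo()), "AV1")` should come back `False` on a Kaby Lake iGPU, going by the table linked above.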

I don't think there's anything that integrates seamlessly with keyboards or social/chat apps, but you could try self-hosting a booru app and then sharing hotlinks to those GIFs.

Boorus are mostly known for anime and weeb stuff, but for memes and GIFs I think the tagging system would work best, since it lets you quickly search for what you want.
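The reason tagging works well for quick searches is that a query is just set intersection over tags, with exclusions subtracted. A toy in-memory sketch of that idea; the `TagIndex` class is hypothetical, and the space-separated query with `-tag` exclusion is modelled loosely on Danbooru-style search, not on any particular booru's code:

```python
"""Sketch: a tiny booru-style tag index (AND of tags, '-tag' excludes)."""
from __future__ import annotations

from collections import defaultdict


class TagIndex:
    def __init__(self) -> None:
        self._posts: dict[int, set[str]] = {}
        self._by_tag: dict[str, set[int]] = defaultdict(set)  # tag -> post ids

    def add(self, post_id: int, tags: list[str]) -> None:
        tags_set = {t.lower() for t in tags}
        self._posts[post_id] = tags_set
        for tag in tags_set:
            self._by_tag[tag].add(post_id)

    def search(self, query: str) -> list[int]:
        include, exclude = [], []
        for term in query.lower().split():
            if term.startswith("-"):
                exclude.append(term[1:])
            else:
                include.append(term)
        if include:
            # Posts must carry every included tag; .get avoids creating empty entries.
            result = set.intersection(*(self._by_tag.get(t, set()) for t in include))
        else:
            result = set(self._posts)
        for tag in exclude:
            result -= self._by_tag.get(tag, set())
        return sorted(result)
```

With posts tagged `reaction cat gif`, `reaction dog gif` and `cat still`, searching `cat -still` returns only the first post.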