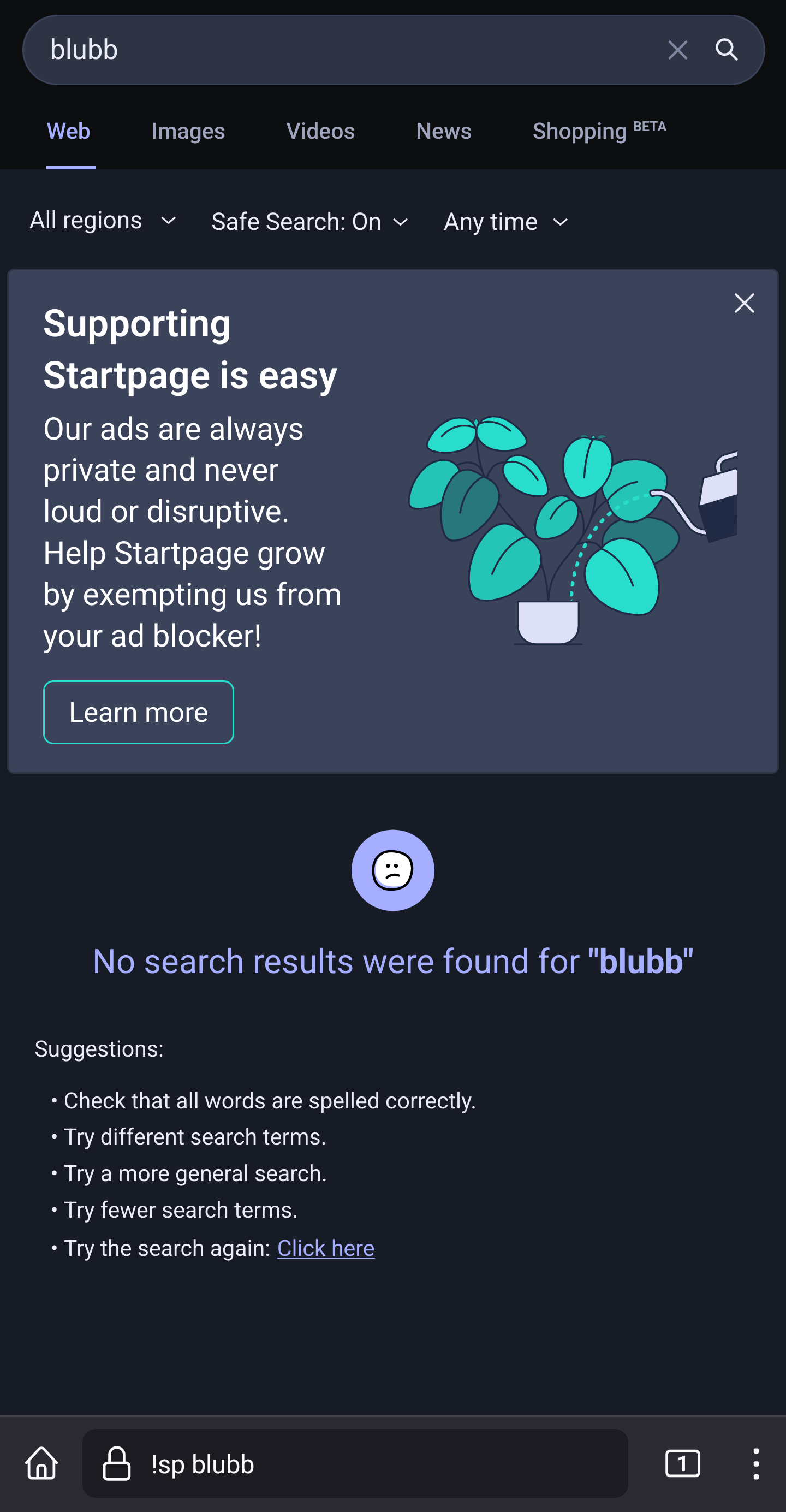

Is it just me, or are many independent search engines down? DuckDuckGo (my go-to engine), Qwant, Ecosia, Startpage… All down? The only hint I got was on the Qwant page…

Edit: it all seems to be related to Bing being down. I hope the independent engines will find a way to become truly independent…

Yandex is still up


Well, it seems that Bing is down too, and most independent search engines are a warper around Bing, so…

*wrapper

*rapper

You gotta believe!

“independent”

lol, how could they all go down at the same time?

I have no idea, but I can’t LOL…

Seems to be related to Bing using AI now…

Yet DuckDuckGo and Startpage seem to be down too, even though DDG supposedly uses its own results and SP is supposedly a front end for Google.

Has DDG ever really claimed to use its own index? I’ve always thought of it as a Bing front end.

Edit: Apparently Startpage pulls from Google and Bing (going off of this).

I recall that DuckDuckGo bought data from Yahoo and then used it on its own, but if that was ever true, it no longer is:

> Of course, we have more traditional links and images in our search results too, which we largely source from Bing.

https://duckduckgo.com/duckduckgo-help-pages/results/sources

“We’re totally not just rewrapping Bing results!!!”

That’s what that is. Shit.

Well, Brave Search works. Any others?

I use an instance from http://searx.space almost exclusively.

Kagi works too

I’m liking Kagi at first blush, thanks for the tip

One of the nicest (and most controversial) tools is the option to have AI summarize a webpage. I hate it and love it at the same time.

We love to hate AI because it is overvalued, but at the same time I’m currently 90% done with my work week thanks to some cheeky AI use. Guilty pleasures.

I find it quite ethical. Its FastGPT tool gives hyperlinks to all of the sources it used. It’s like AI without the stealing.

Bing is down, and so is every engine that uses the Bing API.

Wait, it’s all Bing? 🌎👨‍🚀🔫👨‍🚀

Always has been. Chk chk

It’s about time we made a federated search engine and indexer.

I’ve also thought about this, but I don’t know what the costs of such a thing would be. (I’m ignorant on the subject.)

Isn’t that searx / searxng?

> SearXNG is a fork from the well-known searx metasearch engine which was inspired by the Seeks project. It provides basic privacy by mixing your queries with searches on other platforms without storing search data. SearXNG can be added to your browser’s search bar; moreover, it can be set as the default search engine.
>
> SearXNG appreciates your concern regarding logs, so take the code from the SearXNG sources and run it yourself!
>
> Add your instance to this list of public instances to help other people reclaim their privacy and make the internet freer. The more decentralized the internet is, the more freedom we have!

Not at all. This just searches multiple search engines at once and presents you with the results from all of them on a single page.
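To make the distinction concrete, here is a rough sketch of what a metasearch engine does. The engine functions are hypothetical stand-ins (no real search API is called), and the merge uses a simple reciprocal-rank score with de-duplication by URL; this is an illustrative choice, not SearXNG’s actual scoring code. It also shows why such front ends go dark together: if every upstream fails, there is nothing left to merge.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical engine type: takes a query, returns a ranked list of URLs.
Engine = Callable[[str], List[str]]


def metasearch(query: str, engines: Dict[str, Engine]) -> List[str]:
    """Query every engine, merge the ranked lists, de-duplicate by URL."""
    scores: Dict[str, float] = defaultdict(float)
    for name, engine in engines.items():
        try:
            results = engine(query)
        except Exception:
            # An upstream outage simply drops that engine's contribution.
            continue
        for position, url in enumerate(results, start=1):
            # Reciprocal-rank scoring: top positions count more, and a URL
            # returned by several engines accumulates score from each one.
            scores[url] += 1.0 / position
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda url: (-scores[url], url))


# Hypothetical stand-in engines (no network access).
def engine_a(query: str) -> List[str]:
    return ["https://a.example/1", "https://shared.example/x"]


def engine_b(query: str) -> List[str]:
    return ["https://shared.example/x", "https://b.example/2"]


def engine_down(query: str) -> List[str]:
    raise ConnectionError("upstream unavailable")
```

With all three engines, the URL both working engines agree on comes first: `["https://shared.example/x", "https://a.example/1", "https://b.example/2"]`. With only `engine_down`, the result is an empty list, which is roughly what a Bing-only wrapper experiences during a Bing outage.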

Dogpile.com is back

Dogpile.com is still there, actually.

Aw, hell yes

I was thinking about this and imagined federated servers handling the index DB, search algorithms, and search requests, but leveraging each user’s browser/compute to do the actual web crawling/scraping/indexing, with the server simply performing CRUD operations on the processed data from clients to the index DB. This approach would target the core reason search engines fail (the cost of scraping and processing billions of sites), reduce the cost of hosting a search server, and spread the expense across the user base.
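A minimal sketch of the server side of that idea, assuming clients have already crawled and tokenized pages and submit (URL, terms) pairs. `IndexServer` and its methods are hypothetical names; the server does nothing but CRUD on an in-memory inverted index, which is where the proposed savings would come from. A real system would also need ranking, persistence, and a way to validate untrusted client submissions.

```python
from collections import defaultdict
from typing import Dict, List, Set


class IndexServer:
    """Hypothetical federated index node: clients crawl and tokenize pages,
    and the server only stores and queries the processed results."""

    def __init__(self) -> None:
        self.docs: Dict[str, Set[str]] = {}                     # url -> terms
        self.postings: Dict[str, Set[str]] = defaultdict(set)  # term -> urls

    def upsert(self, url: str, terms: List[str]) -> None:
        """Create or update a document submitted by a client."""
        self.delete(url)  # drop stale postings before re-indexing
        term_set = {t.lower() for t in terms}
        self.docs[url] = term_set
        for term in term_set:
            self.postings[term].add(url)

    def delete(self, url: str) -> None:
        """Remove a document, e.g. when a client reports the page is gone."""
        for term in self.docs.pop(url, set()):
            self.postings[term].discard(url)
            if not self.postings[term]:
                del self.postings[term]

    def search(self, query: str) -> List[str]:
        """Read: return the URLs that contain every query term, sorted."""
        terms = query.lower().split()
        if not terms:
            return []
        hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(hits)
```

For example, after `upsert("https://a.example/", ["Federated", "search"])` and `upsert("https://b.example/", ["search", "engine"])`, a query for `"federated search"` returns only `["https://a.example/"]`.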

This approach may also have the added benefit of hindering surveillance capitalism by generating a sea of junk queries from every client, especially if the crawler requests were made from the same browser (which would obviously need to be isolated from the user’s own data, extensions, queries, etc.). The federated servers would also probably need to operate as lighthouses that orchestrate which domains and IP ranges to crawl and efficiently distribute the workload to client machines.
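One way a “lighthouse” could split crawl work across clients, sketched under assumptions: clients are identified by opaque IDs, work is handed out per domain, and rendezvous (highest-random-weight) hashing is a technique chosen here for illustration, not part of the proposal above. Its appeal is that volunteer clients join and leave constantly, and this scheme only reassigns the domains owned by the client that left.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List


def _weight(client_id: str, domain: str) -> int:
    """Deterministic pseudo-random weight for a (client, domain) pair."""
    digest = hashlib.sha256(f"{client_id}|{domain}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def assign_domains(domains: List[str], clients: List[str]) -> Dict[str, List[str]]:
    """Rendezvous hashing: each domain goes to the client with the highest
    weight, so every domain has exactly one crawler, and removing a client
    only moves the domains that client owned."""
    assignment: Dict[str, List[str]] = defaultdict(list)
    for domain in domains:
        owner = max(clients, key=lambda c: _weight(c, domain))
        assignment[owner].append(domain)
    return dict(assignment)
```

Every server computes the same assignment from the same inputs, so no shared lock or central queue is needed to avoid two clients crawling the same domain.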

The theory with crawling is that it has discovery built in, no? You follow outbound links and discover domains that way. So you need some seeds, but otherwise you discover based on what other people already know about.
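Seed-based discovery is essentially a breadth-first traversal of the link graph. In this sketch, a hypothetical in-memory `LINKS` dictionary stands in for real HTTP fetches and HTML parsing, so it only shows the frontier logic: start from seeds, follow outbound links, and record every new domain you reach.

```python
from collections import deque
from typing import Dict, List, Set
from urllib.parse import urlparse

# Hypothetical link graph standing in for real fetches:
# page URL -> outbound links found on that page.
LINKS: Dict[str, List[str]] = {
    "https://seed.example/": ["https://seed.example/about", "https://blog.example/post"],
    "https://seed.example/about": ["https://wiki.example/"],
    "https://blog.example/post": ["https://seed.example/", "https://news.example/today"],
    "https://wiki.example/": [],
    "https://news.example/today": [],
}


def crawl(seeds: List[str], max_pages: int = 100) -> Set[str]:
    """Breadth-first crawl from seed URLs; returns the domains discovered."""
    frontier = deque(seeds)
    visited: Set[str] = set()
    domains: Set[str] = set()
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        if url in visited:
            continue
        visited.add(url)
        domains.add(urlparse(url).netloc)
        # A real crawler would fetch the page and parse its links here.
        for link in LINKS.get(url, []):
            if link not in visited:
                frontier.append(link)
    return domains
```

Starting from `https://seed.example/` reaches all four domains, while starting from `https://wiki.example/` (which links nowhere) finds only itself. That is the seeding problem in miniature: you can only discover what something you already know links to.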

To me the problem seems like a few submarines in a cave. Each can see a little bit of what’s around it, and then they can share maps. The minimum knowledge of the internet is one’s own exploration: as one browses the web, one’s sensors store everything they see. The agent also actively searches alongside other agents and crawls automatically on its own, like a submarine’s active sensors constantly mapping out the environment.

Then, in the presence of other friendly subs, you can trade information. So one’s own small, personal map of the internet can be merged with others’ maps to get an increasingly complete version.

Obviously this can be automated and batched, but that’s sort of the analogy I see in the real world: multiple parties exploring an unknown/changing space and sharing their data to make a map.
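The map-trading part of the analogy can be sketched as a set union over partial link maps. The representation (page URL to set of outbound links seen) is an assumption for illustration. Union is commutative, associative, and idempotent, so subs can trade maps in any order, and re-trading the same map does no harm.

```python
from typing import Dict, Set

# Hypothetical representation of one agent's map:
# page URL -> set of outbound links that agent has seen on it.
LinkMap = Dict[str, Set[str]]


def merge_maps(*maps: LinkMap) -> LinkMap:
    """Combine several partial maps into one without mutating the inputs."""
    merged: LinkMap = {}
    for m in maps:
        for page, links in m.items():
            # Fresh sets are created here, so inputs are never aliased.
            merged.setdefault(page, set()).update(links)
    return merged
```

Because the merge only grows, it says nothing about pages that disappear; handling deletions or stale entries would need something extra, such as timestamps on each observation.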

Shit, man, that’s exactly the kind of implementation I was thinking about. I’ve had the idea for a couple of years now, but now that the fediverse is starting to gain traction, I think it’s probably about time some code gets written. Unfortunately, due to CORS you can’t just start serving people a JS script that starts indexing in the background.
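The CORS obstacle can be illustrated with a simplified model of the browser’s decision. This is a sketch, not the full Fetch standard: it ignores preflight requests and credentialed requests, and the function names are hypothetical. A script may read a response from its own origin; for any other origin, the target server has to opt in with `Access-Control-Allow-Origin`. Ordinary web pages generally don’t send that header, and a `no-cors` fetch only yields an opaque response the script cannot read, so a background indexer served from one origin can’t read the pages it would need to index.

```python
from typing import Mapping, Optional, Tuple
from urllib.parse import urlsplit

Origin = Tuple[str, Optional[str], Optional[int]]


def origin(url: str) -> Origin:
    """An origin is the (scheme, host, port) triple, with default ports filled in."""
    parts = urlsplit(url)
    default_ports = {"http": 80, "https": 443}
    return (parts.scheme, parts.hostname, parts.port or default_ports.get(parts.scheme))


def script_can_read(page_url: str, target_url: str, headers: Mapping[str, str]) -> bool:
    """Simplified CORS check for a plain, non-credentialed fetch()."""
    if origin(page_url) == origin(target_url):
        return True  # same-origin reads are always allowed
    # Header names are case-insensitive.
    lowered = {k.lower(): v for k, v in headers.items()}
    allowed = lowered.get("access-control-allow-origin")
    if allowed is None:
        return False  # the target server did not opt in
    if allowed.strip() == "*":
        return True
    return origin(allowed.strip()) == origin(page_url)
```

This is also why the idea upthread leans on a browser extension or native client rather than a plain web page: those run outside the page-level same-origin rules.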

Something like YaCy?

Looks interesting; why have I never heard of this? Are there any websites that rank search engines using some concrete metrics?

idk about any ranking sites, but from what I understand, YaCy gets better the more people participate in it.

Other people already mentioned SearXNG, which is also great.

That’s what I’m currently using, but it’s a metasearch engine and still relies on everyone else’s proprietary crap.

Google doesn’t

Kagi works fine 👍

There are meta engines that search across instances of searx.

Kagi works fine.

Yandex is working

Well, isn’t that great, Mr. Moneybags

/s

Yes, I’m making a stupid joke because it’s a paid search engine, something I never would have imagined would be a thing. Now I’m going to price it and perhaps finally pull the trigger on an account there, because fuck Google, fuck Bing, and fuck all these sites being “wrappers” of them. I come from the dial-up days of Lycos, WebCrawler, AltaVista, and Yahoo: hard searches that would separate the men from the boys. Now we just get this “A.I.” bullshit instead, and it falls apart under its own weight. Excuse me while I wave my cane at the sun ☀️

I was there too. Do try the freebies and see how you like it; there’s nothing to lose. Personally, that convinced me, and I switched to the 300-search plan. Then I got a new job where I was making lots of searches and outgrew it, so now I’m on Unlimited. IMO, Ultimate is useless unless you really like the vision or something; I just pay for a working product.

I hope Apple will finally allow custom search engines, because the current workaround for using Kagi as the default in iOS Safari is a shitty user experience (and I’m not blaming Kagi for that).

Lol wow. It is 2024 and Apple still doesn’t give you basic browser v1.0 functionality.

I have never used iOS but I’d guess that makes browsing on it a little less convenient than on a terminal with curl.

You’re implying that the first browser was curl? I don’t think people called that a browser. And even if they did, they obviously weren’t using it like we do now.

Obviously not the first, but it might win a “most basic browser currently maintained” competition if it qualifies (not if HTML rendering is a criterion).

I mean, it’s an HTTP client, I’ll give you that, but I don’t see how it could be considered a “browser” since all it handles is the server interaction.

The Bing frontends are down.

DDG was working earlier today, but for the past hour it just throws an error “There was an error displaying the search results. Please try again.”